tensorflow - Why are the models in the tutorials not converging on GPU (but working on CPU)? - Stack Overflow

Slowdown of fault-tolerant systems normalized to the vanilla baseline... | Download Scientific Diagram

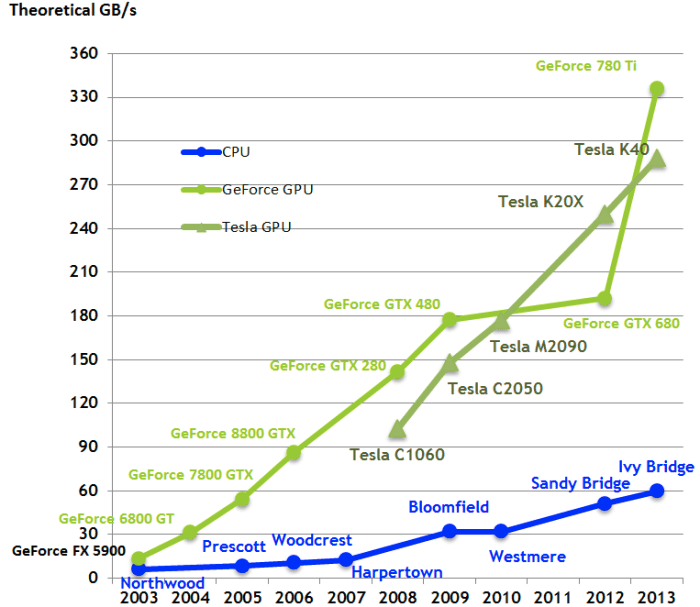

Comparison of GPU and CPU Efficiency While Solving Heat Conduction Problems - Document - Gale Academic OneFile

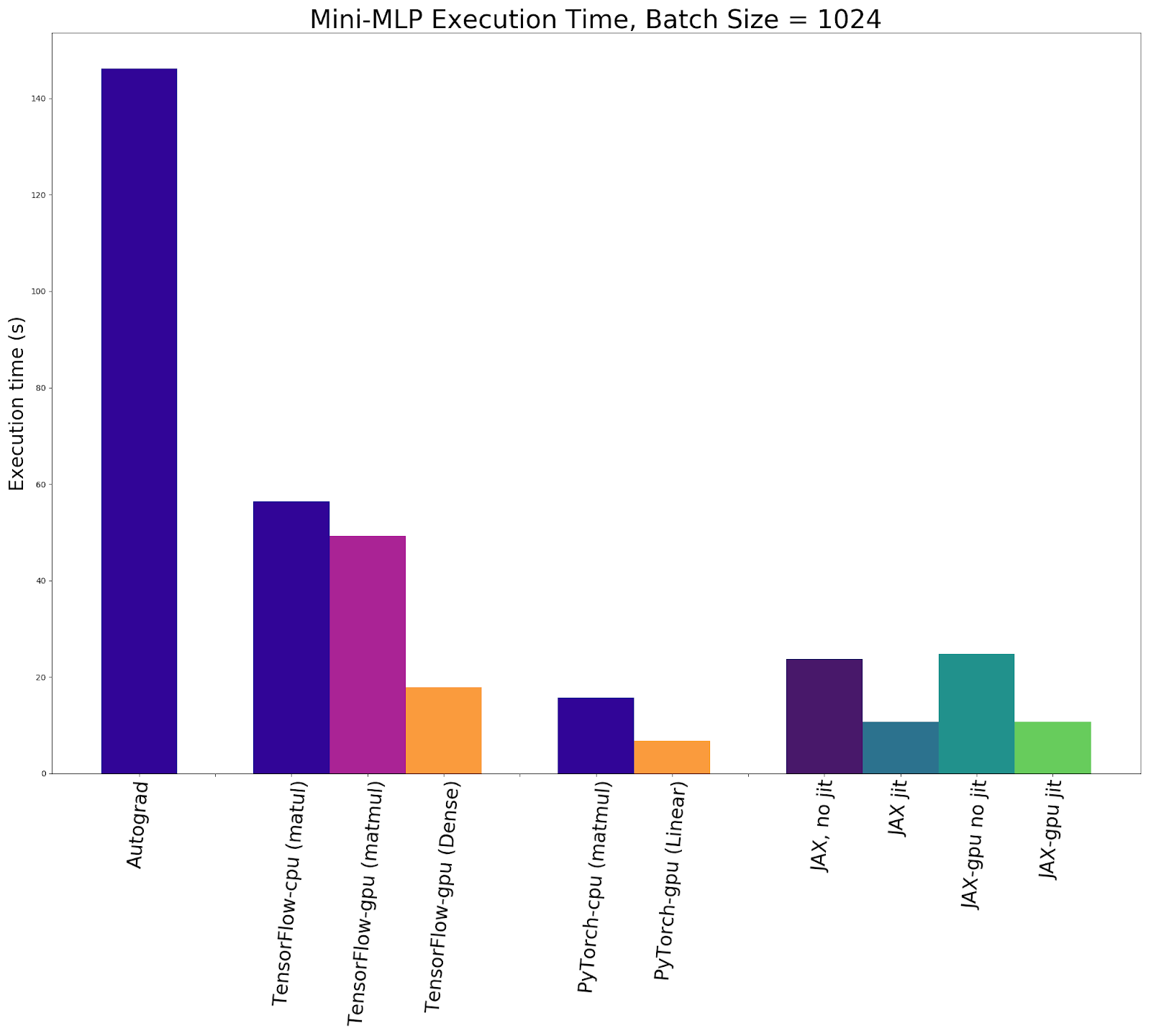

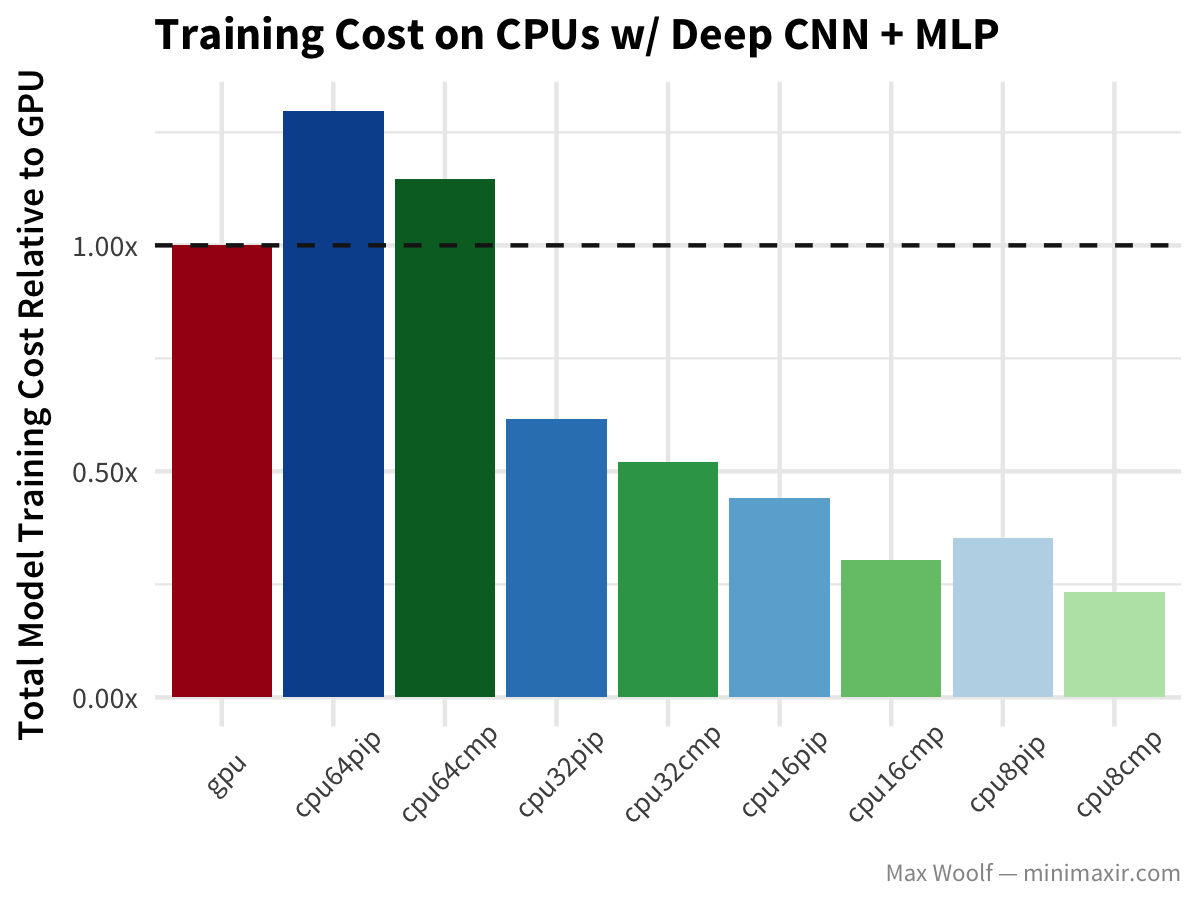

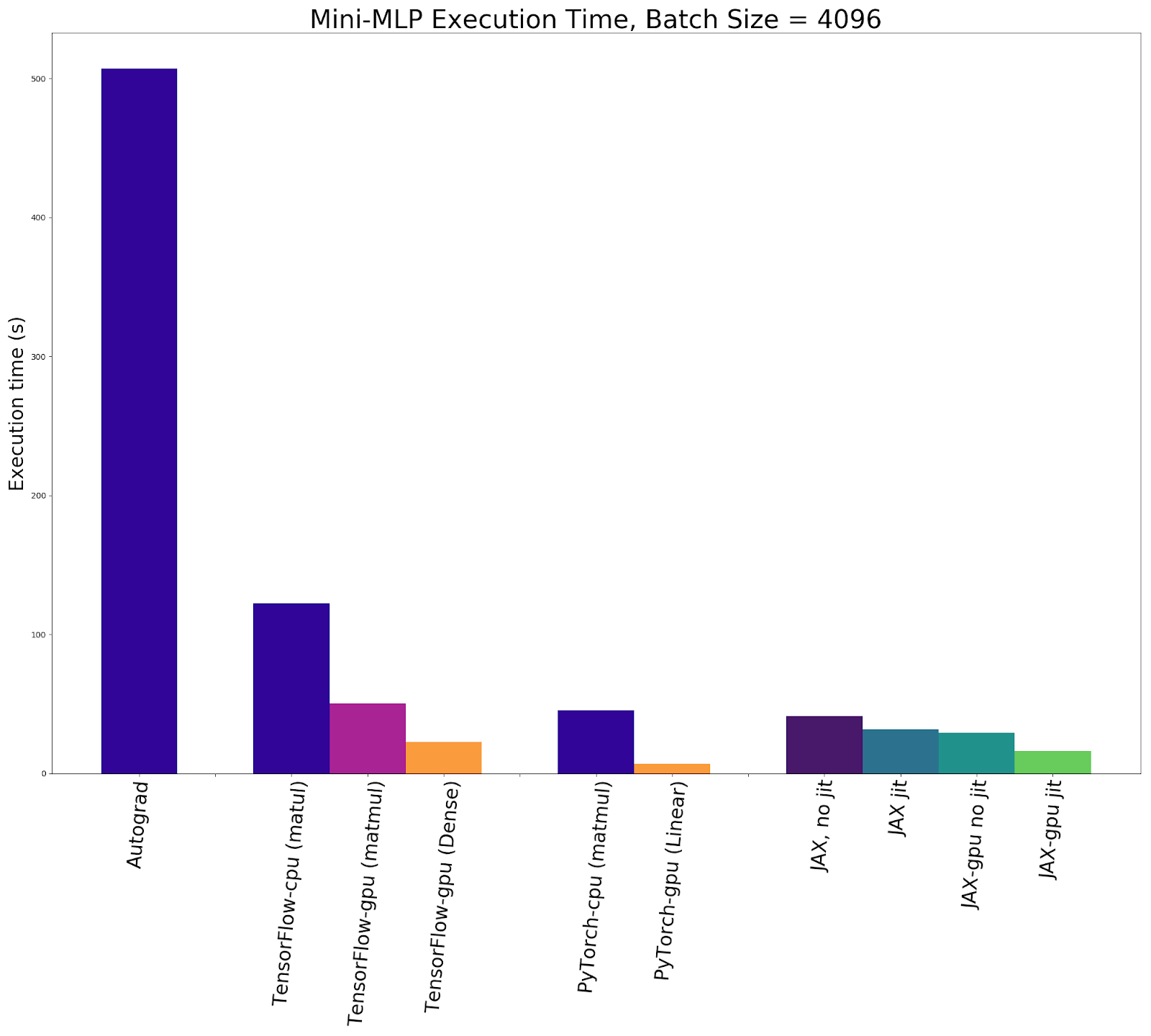

Accelerated Automatic Differentiation with JAX: How Does it Stack Up Against Autograd, TensorFlow, and PyTorch? | Exxact Blog