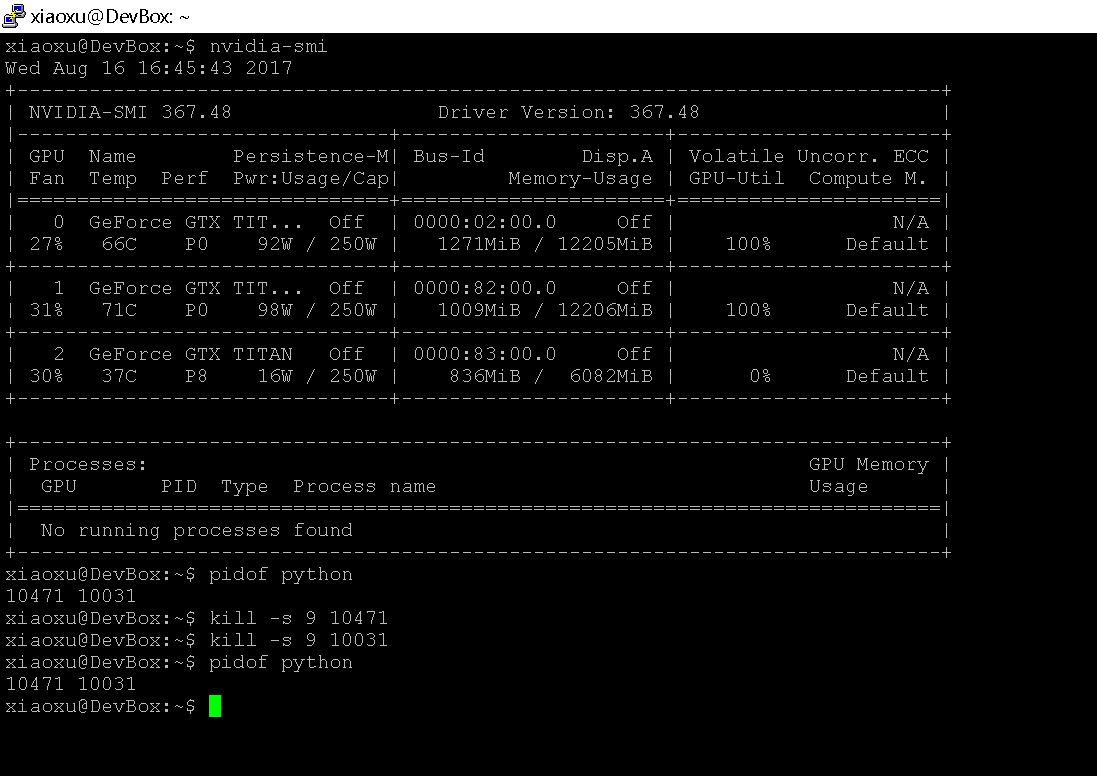

linux - "Graphic card error(nvidia-smi prints "ERR!" on FAN and Usage)" and processes are not killed and gpu not being reset - Super User

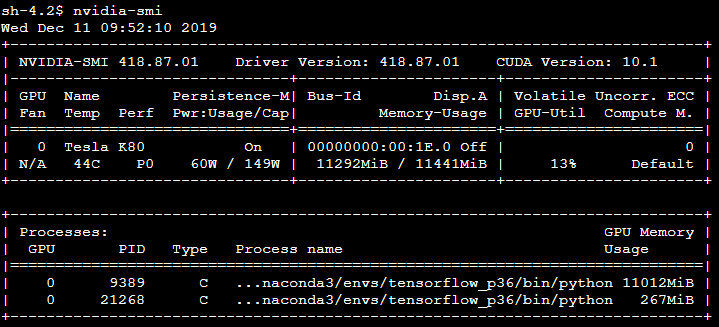

GPU memory is empty, but CUDA out of memory error occurs - CUDA Programming and Performance - NVIDIA Developer Forums

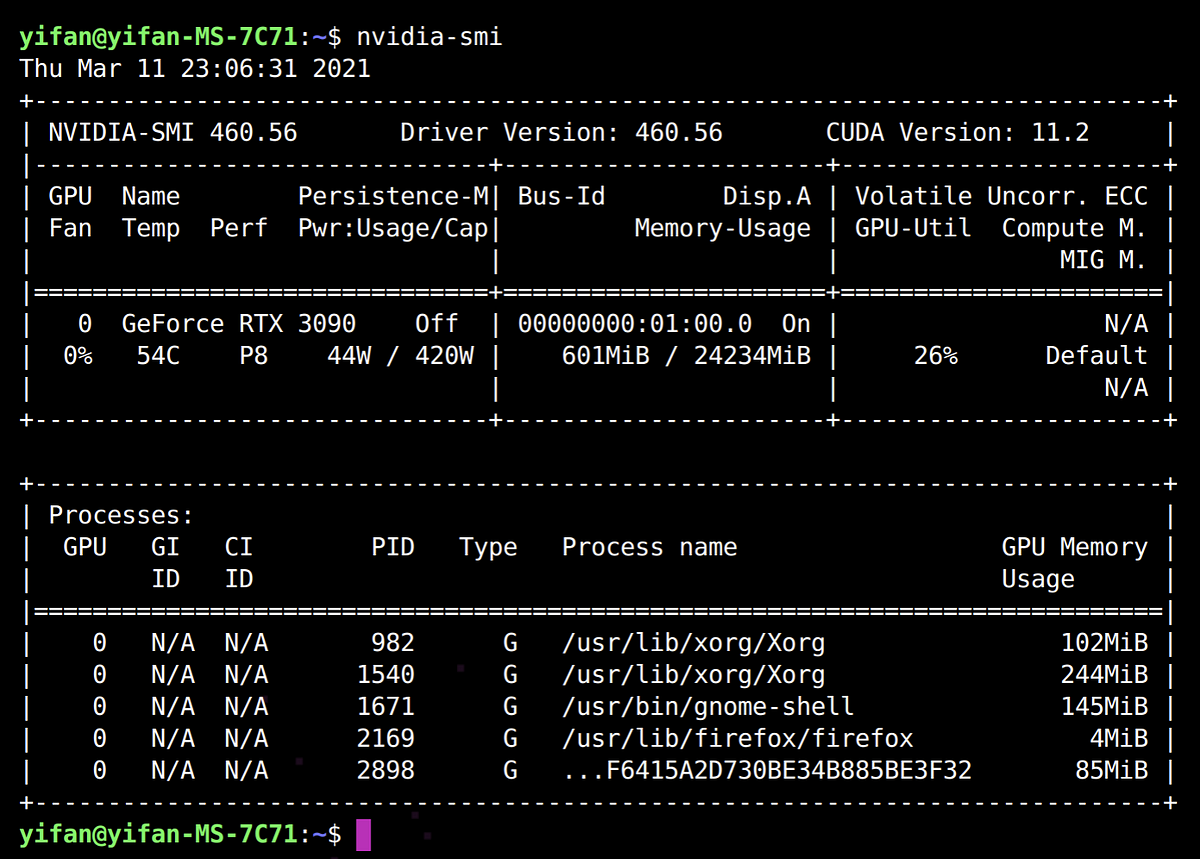

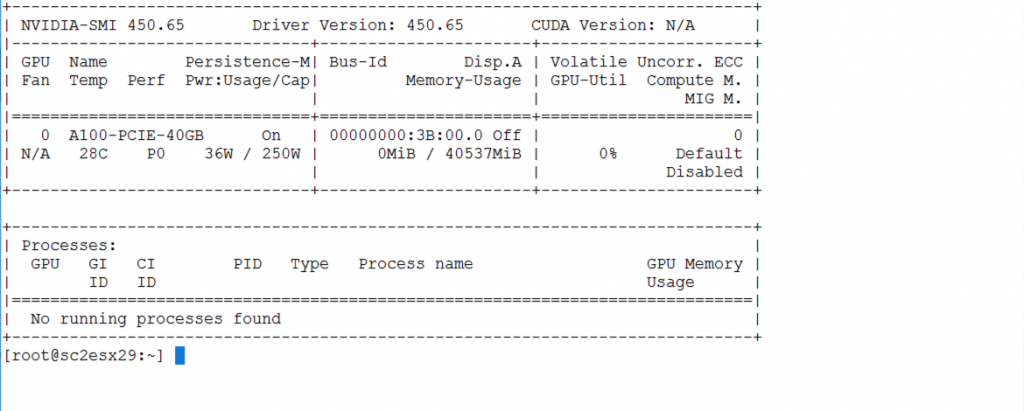

vSphere 7 with Multi-Instance GPUs (MIG) on the NVIDIA A100 for Machine Learning Applications - Part 1: Introduction - Virtualize Applications

Bug: GPU resources not released appropriately when graph is reset & session is closed · Issue #18357 · tensorflow/tensorflow · GitHub

apt - Cuda: NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver - Ask Ubuntu

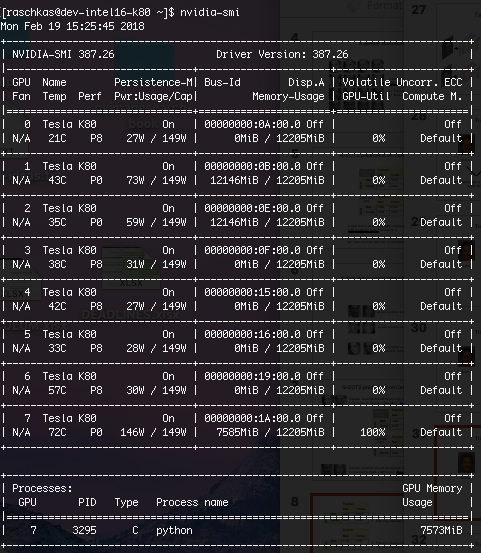

When I shut down the pytorch program by kill, I encountered the problem with the GPU - PyTorch Forums

Nvidia-smi product name error and no cuda capable device - CUDA Setup and Installation - NVIDIA Developer Forums

Install CUDA 11.2, cuDNN 8.1.0, PyTorch v1.8.0 (or v1.9.0), and python 3.9 on RTX3090 for deep learning | by Yifan Guo | Analytics Vidhya | Medium

Nvidia-smi shows high global memory usage, but low in the only process - CUDA Programming and Performance - NVIDIA Developer Forums

![\[Plugin\] Nvidia-Driver - Plugin Support - Unraid](https://forums.unraid.net/uploads/monthly_2020_11/Bildschirmfoto_2020-11-15_20-17-13.png.4821128ecd7b5eed35f5a259acd1f7cb.png)