Sun Tzu's Awesome Tips on CPU or GPU for Inference | E2E Cloud

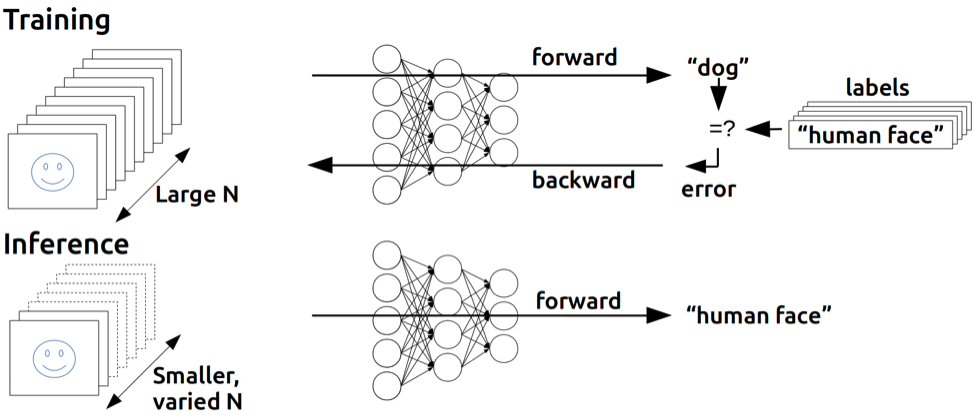

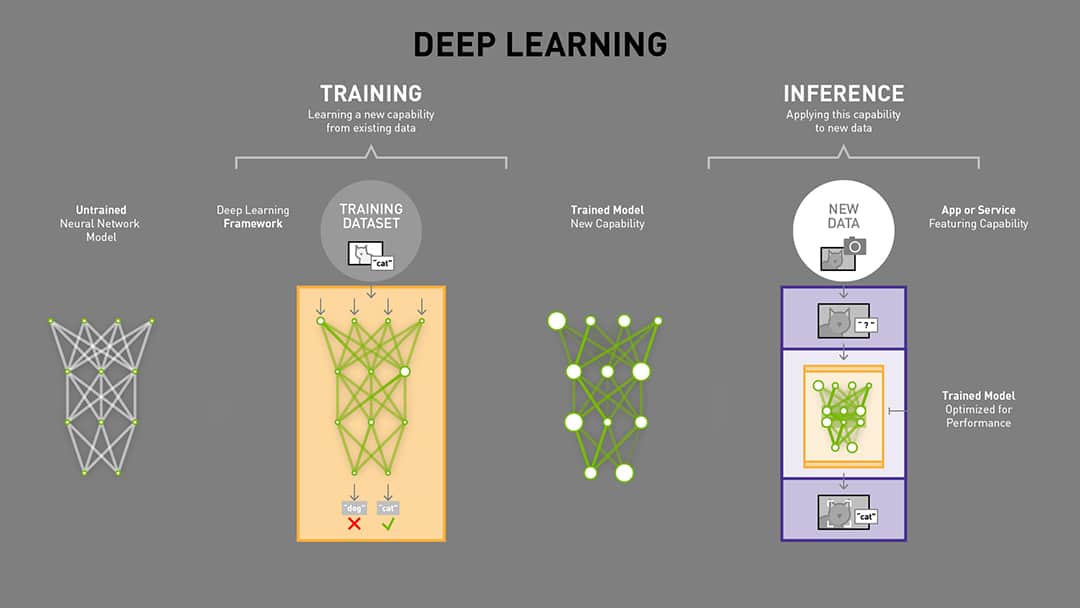

A complete guide to AI accelerators for deep learning inference — GPUs, AWS Inferentia and Amazon Elastic Inference | by Shashank Prasanna | Towards Data Science

Neousys Ruggedized AI Inference Platform Supporting NVIDIA Tesla and Intel 8th-Gen Core i Processor - CoastIPC

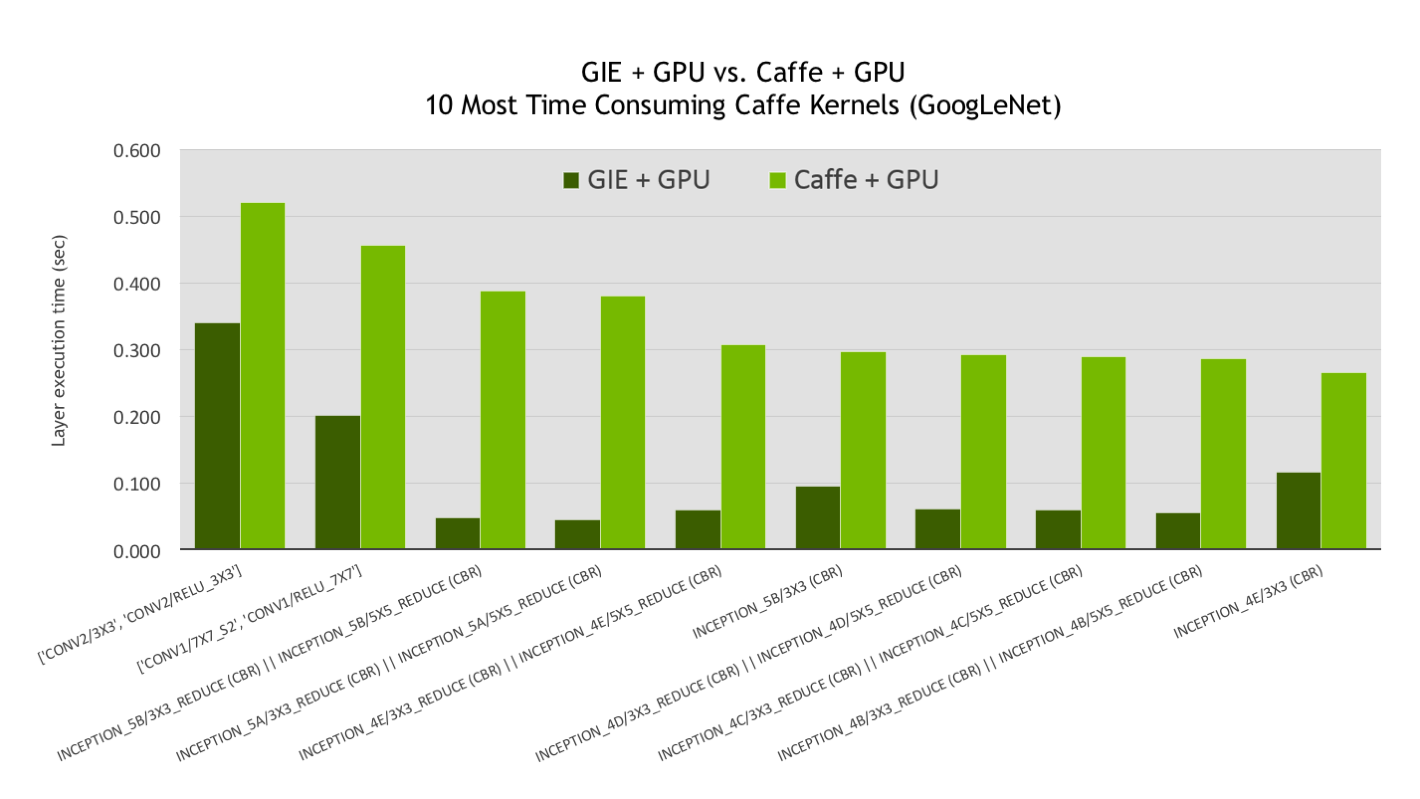

GPU-Accelerated Inference for Kubernetes with the NVIDIA TensorRT Inference Server and Kubeflow | by Ankit Bahuguna | kubeflow | Medium

A comparison between GPU, CPU, and Movidius NCS for inference speed and... | Download Scientific Diagram

FPGA-based neural network software gives GPUs competition for raw inference speed | Vision Systems Design