Python API Transformer.from_pretrained support directly to load on GPU · Issue #2480 · facebookresearch/fairseq · GitHub

How to dedicate your laptop GPU to TensorFlow only, on Ubuntu 18.04. | by Manu NALEPA | Towards Data Science

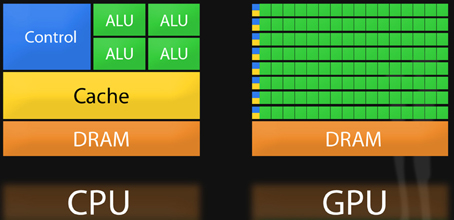

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

cifar10 train no gpu utilization, full gpu memory usage, system cpu full loading · Issue #7339 · tensorflow/models · GitHub

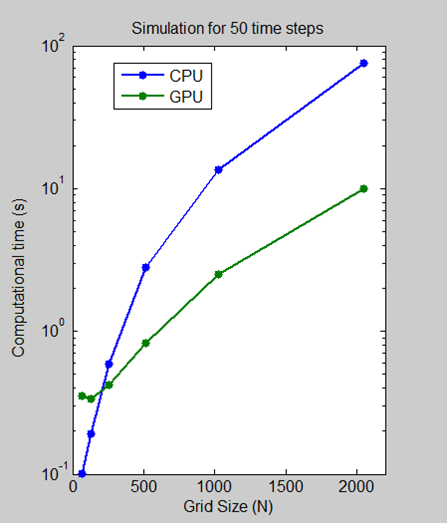

3.1. Comparison of CPU/GPU time required to achieve SS by Python and...

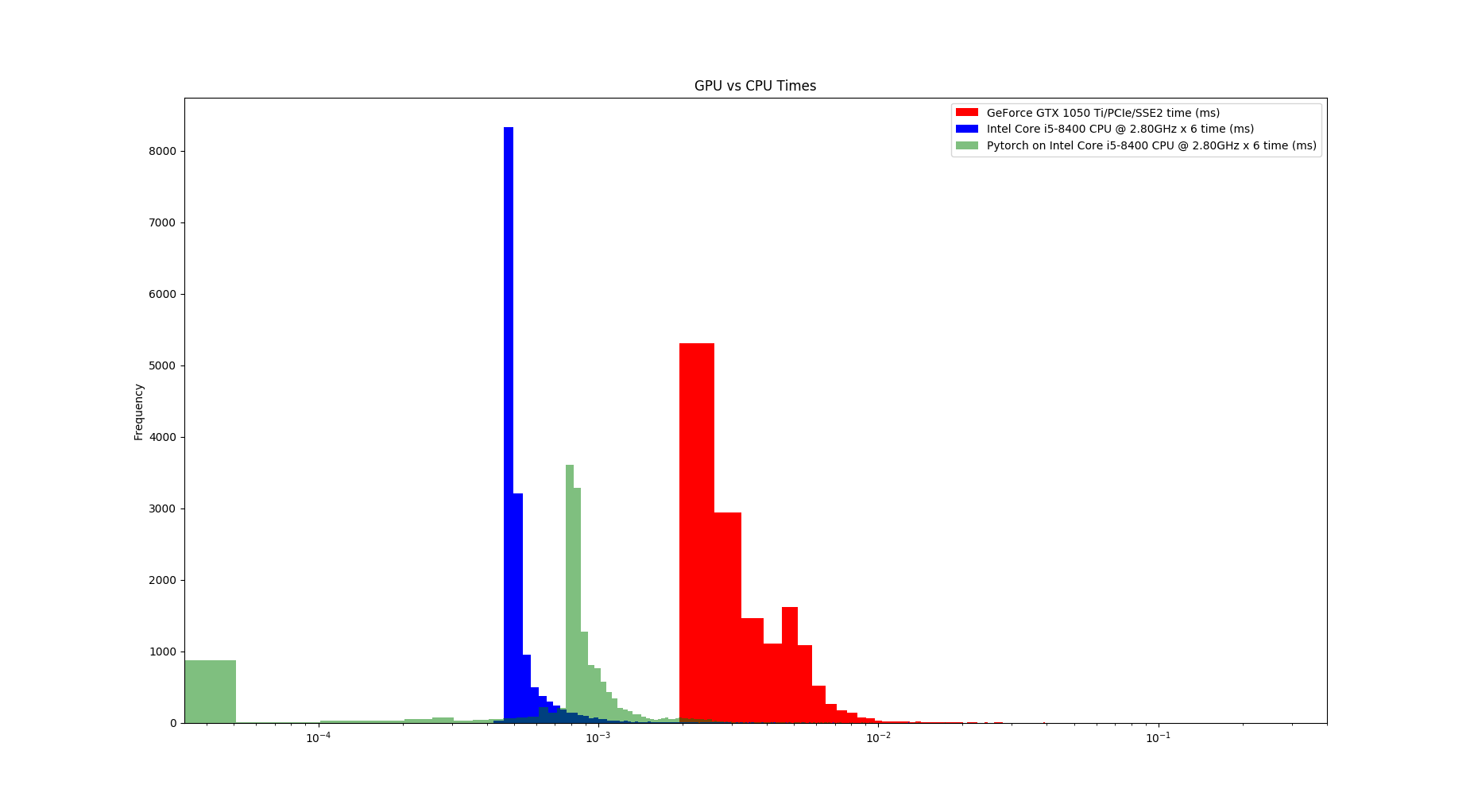

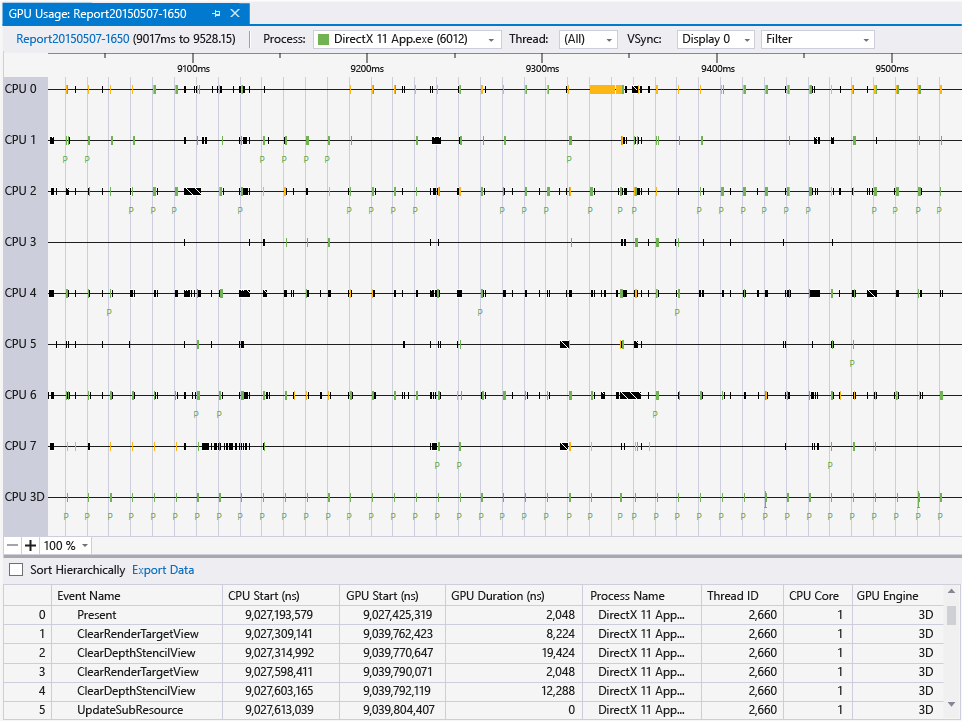

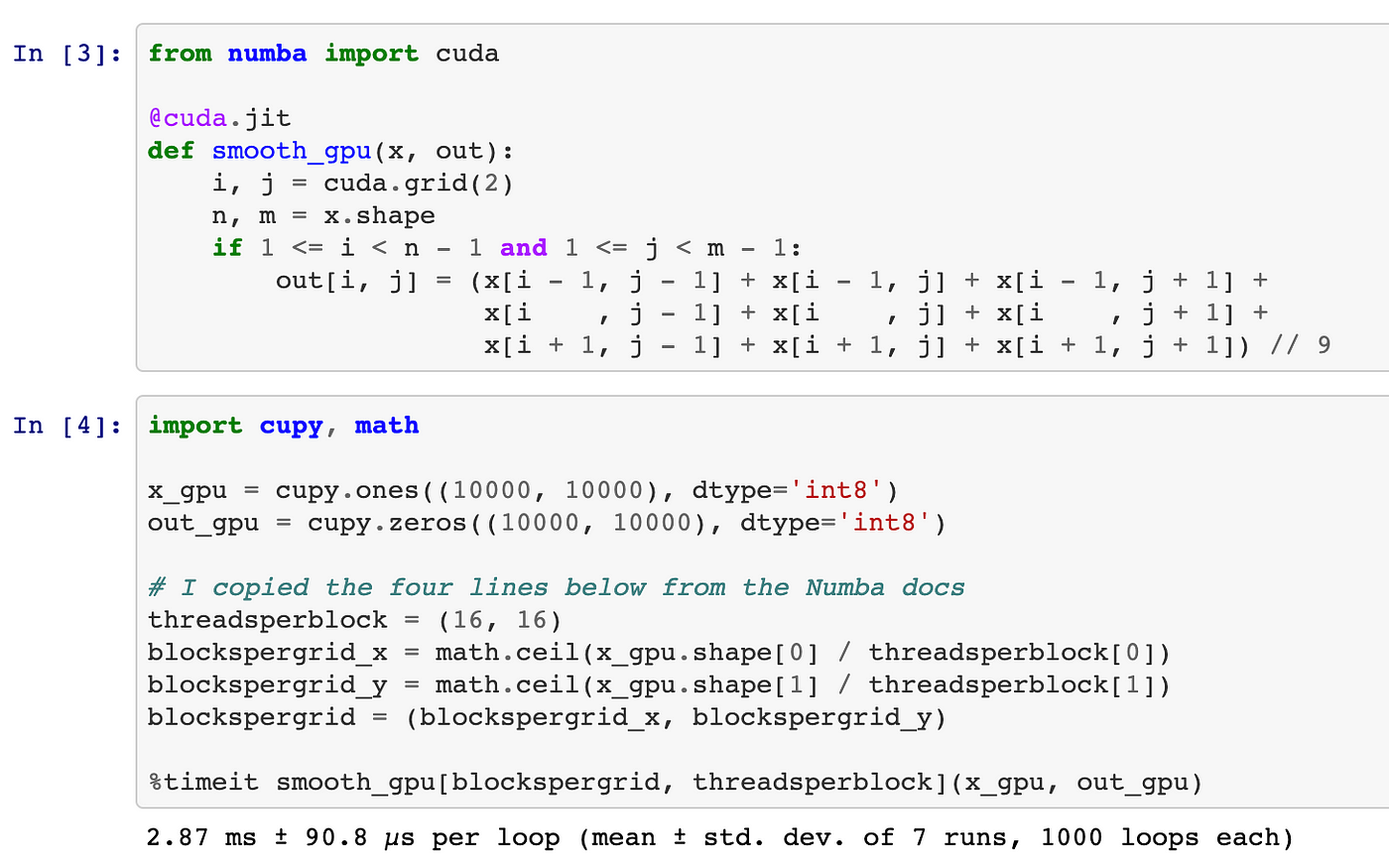

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

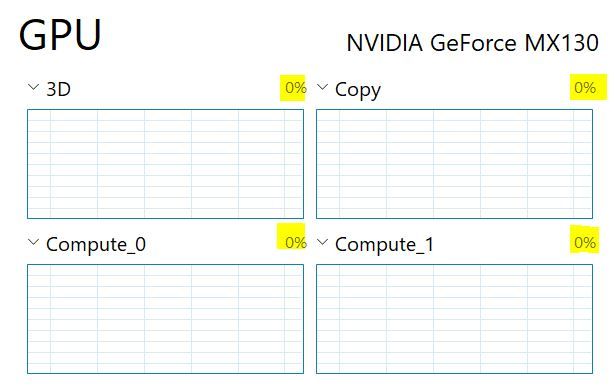

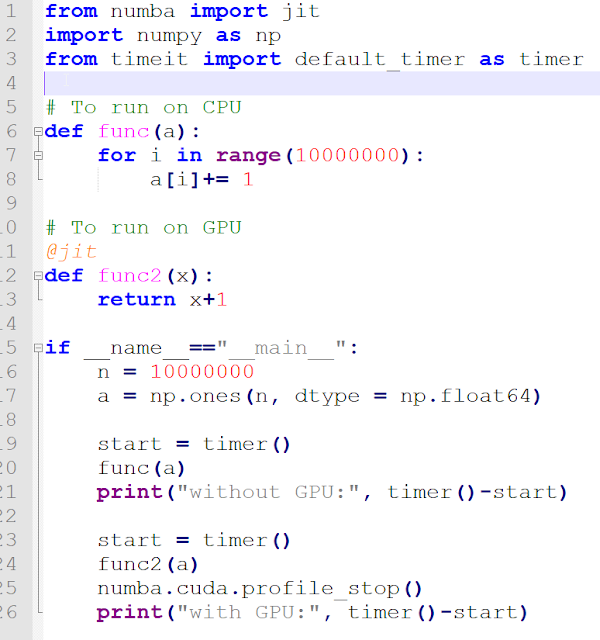

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium